Cloud-Native Meets High Performance

We combine the strength of high-performance computing (HPC) with the agility of cloud-native and serverless technologies. Our solutions are designed to tackle the challenges of modern scientific research: faster simulations, complex data analysis, and efficient workflows built on scalable data models.

Customers

Broad Institute

We partnered with the Broad Institute to implement cutting-edge scientific computing workflows, enabling researchers to process genomic data at unprecedented speeds and drive advancements in precision medicine.

US Census Bureau

Our solution empowered Census Bureau statisticians to process and analyze vast amounts of Census data efficiently, improving accessibility and enabling faster decision-making.

Moderna

We collaborated with Moderna to accelerate drug discovery using scientific computing solutions, optimizing processes to identify potential treatments faster and more effectively.

World Health Organization (WHO)

For the WHO, we developed robust virus surveillance programs that leverage scientific computing to monitor and track virus patterns globally, aiding in early detection and response efforts.

Scientific Computing in Any Cloud Environment

We help you harness any major cloud platform, whether AWS, Azure, or Google Cloud Platform (GCP), to accelerate your scientific research and computing work. Our solutions adapt to your preferred cloud provider, so you keep flexibility and scalability.

Amazon Web Services (AWS)

We often use Lambda for serverless HPC workflows, S3 for scalable storage, and SageMaker for machine learning, among other services.

Microsoft Azure

We leverage Azure Functions for serverless computing, Blob Storage for large-scale data, and Azure ML for AI solutions, alongside other tools.

Google Cloud Platform (GCP)

We commonly utilize Cloud Functions for serverless HPC, BigQuery for analytics, and Vertex AI for machine learning, in addition to other resources.

Revolutionizing Research with HPC Solutions

Solid Logix leverages cutting-edge scientific computing to solve complex challenges in research and development. Our expertise enables organizations to scale their efforts and achieve impactful breakthroughs in data-driven fields.

Applications of Scientific Computing

Scientific computing empowers groundbreaking research and real-world applications by processing and analyzing massive datasets efficiently.

Seismic Simulation

Leverage HPC to model seismic events, aiding in infrastructure design, risk assessment, and disaster preparedness.

Geospatial Analysis

Process and analyze spatial data for applications in urban planning, resource management, and disaster response using GIS and HPC solutions.

Economic Forecasting

Utilize HPC to model economic systems and forecast market trends, empowering policymakers and financial institutions.

Genomic Research

Analyze genomic sequences at scale, enabling advancements in personalized medicine, drug development, and disease prevention.

Climate Modeling

Run simulations to predict weather patterns, assess climate risks, and study environmental changes.

Drug Discovery

Model molecular interactions and analyze massive chemical libraries to accelerate drug candidate identification.

Physics and Astronomy

Process astronomical data and simulate physical phenomena to uncover new insights about the universe.

Financial Modeling

Perform complex financial simulations and risk assessments to inform investment strategies and economic policies.

Robotics and Automation

Develop and test algorithms for autonomous systems, enhancing capabilities in robotics and industrial automation.

Advancing Science with High-Performance Computing

HPC accelerates scientific breakthroughs by enabling massive parallel processing, rapid simulations, and data-driven insights.

Benefits of Our Approach

Use Cases and Technologies

Key Technologies: Apache Spark on EMR, GPU instances, AWS Glue.

Key Technologies: EMR with Hadoop, AWS Lambda, S3 and Glacier.

Key Technologies: GPU instances, machine learning models, S3.

Key Technologies: EMR with Apache Spark, S3 and Redshift, AWS Batch.

Key Technologies: EMR for IoT data, SageMaker, CloudWatch.

Key Technologies: EMR, Athena, Lambda.

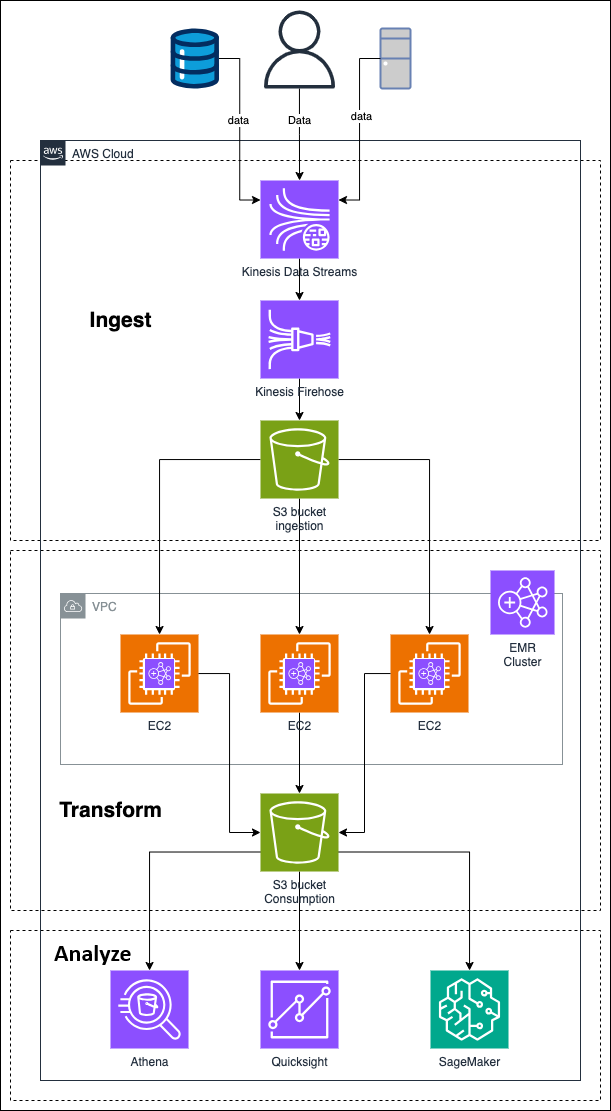

An example of how we harnessed the power of AWS.

We specialize in scientific computing solutions that combine high-performance computing (HPC) with the flexibility and scalability of AWS. By leveraging cutting-edge technologies like EMR clusters and serverless computing, we enable researchers and organizations to process massive datasets, run complex simulations, and achieve breakthroughs faster and more cost-effectively.

AWS-Powered Scientific Workflows

1. Distributed Data Processing with EMR Clusters

Our solutions use Amazon EMR (formerly Amazon Elastic MapReduce) to process large-scale datasets with frameworks such as Apache Spark and Hadoop. EMR clusters let us distribute computations across multiple nodes, enabling faster and more efficient data analysis.
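
As a minimal sketch of how a Spark job might be handed to an EMR cluster, the snippet below builds a step definition that runs `spark-submit` through EMR's `command-runner.jar`. The bucket name, script path, and step name are hypothetical placeholders, not details of any real project, and the submission call is shown only as a comment because it needs boto3 and AWS credentials.

```python
from typing import Dict, List


def build_spark_step(name: str, script_uri: str, script_args: List[str]) -> Dict:
    """Return an EMR step definition that runs a PySpark script via spark-submit."""
    return {
        "Name": name,
        # Keep the cluster running if this step fails so logs can be inspected.
        "ActionOnFailure": "CONTINUE",
        "HadoopJarStep": {
            # command-runner.jar lets an EMR step execute a command such as spark-submit.
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", "--deploy-mode", "cluster", script_uri, *script_args],
        },
    }


# Hypothetical bucket, script, and paths, used only for illustration.
step = build_spark_step(
    name="variant-counts",
    script_uri="s3://example-research-bucket/jobs/count_variants.py",
    script_args=[
        "--input", "s3://example-research-bucket/raw/",
        "--output", "s3://example-research-bucket/results/",
    ],
)

# With boto3 installed and credentials configured, the step could be submitted with:
#   boto3.client("emr").add_job_flow_steps(JobFlowId="<cluster-id>", Steps=[step])
```

Building the step as plain data keeps it easy to review and unit test before anything is sent to a live cluster.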

2. Seamless Data Storage and Access with S3

We store and manage your data on Amazon S3, a secure and scalable object storage service. Because EMR can read from and write to S3 directly, this setup allows quick access to large datasets while maintaining robust data integrity.
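
One way to back up the data-integrity point is to compute a checksum before upload and compare it again after download. The sketch below assumes SHA-256 is the chosen algorithm and uses hypothetical helper names. S3's `PutObject` accepts a base64-encoded SHA-256 digest in its `ChecksumSHA256` parameter, which is the format produced here.

```python
import base64
import hashlib


def sha256_checksum_b64(payload: bytes) -> str:
    """Return the base64-encoded SHA-256 digest of payload."""
    return base64.b64encode(hashlib.sha256(payload).digest()).decode("ascii")


def verify_download(payload: bytes, expected_checksum: str) -> bool:
    """Check that downloaded bytes still match the checksum recorded at upload."""
    return sha256_checksum_b64(payload) == expected_checksum


# Hypothetical file contents, used only for illustration.
data = b"sample_id,reading\nA1,3.5\n"
checksum = sha256_checksum_b64(data)

# With boto3 installed, the checksum could be attached at upload time:
#   boto3.client("s3").put_object(Bucket="<bucket>", Key="<key>", Body=data,
#                                 ChecksumSHA256=checksum)
```

If the stored object is later altered or truncated, `verify_download` returns `False` for the retrieved bytes.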

3. Event-Driven Processing with Lambda

For lightweight and event-triggered tasks, we use AWS Lambda to complement EMR workflows. This serverless approach ensures you only pay for the compute time you use, making it ideal for irregular workloads.
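
A minimal sketch of such an event-triggered task is a Lambda handler that reacts to S3 "object created" notifications. The handler below only collects the URIs of the new objects. What happens next (for example, submitting an EMR step) is left as a comment, since that part depends on the project. The bucket and key in the sample event are hypothetical.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def handler(event: Dict[str, Any], context: Any) -> Dict[str, List[str]]:
    """Collect the S3 URIs of objects named in an S3 event notification."""
    uris = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 notifications are URL-encoded (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        uris.append(f"s3://{bucket}/{key}")
    # A real function might submit an EMR step or start a downstream job here.
    return {"objects": uris}


# Hypothetical event, trimmed to the fields the handler reads.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-research-bucket"},
                "object": {"key": "runs/sample+01.csv"}}}
    ]
}
result = handler(sample_event, None)
```

Because the function runs only when a file arrives, there is no idle compute to pay for between uploads.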

4. Accelerated Computations with GPU Instances

We integrate Amazon EC2 GPU instances such as P4 and G4 for compute-intensive tasks, including machine learning, genomic analysis, and molecular simulations. This can dramatically reduce processing times for these workloads.

5. Automation with CloudFormation and IaC

Our use of AWS CloudFormation enables automated provisioning of EMR clusters, storage, and supporting infrastructure. This Infrastructure-as-Code (IaC) approach ensures consistent, reliable setups tailored to your project needs.
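
To illustrate the Infrastructure-as-Code idea, the sketch below generates a small CloudFormation template containing a versioned S3 bucket and an EMR cluster. The resource names, instance types, release label, and use of the default EMR roles are all assumptions chosen for illustration. A real template would be sized and secured for the project at hand.

```python
import json
from typing import Dict


def build_template(cluster_name: str, release_label: str = "emr-6.15.0") -> Dict:
    """Return a CloudFormation template dict with a data bucket and an EMR cluster."""
    # Assumed instance size; real workloads would pick this per project.
    instance_group = {"InstanceCount": 1, "InstanceType": "m5.xlarge"}
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative EMR cluster with a versioned data bucket",
        "Resources": {
            "DataBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {"VersioningConfiguration": {"Status": "Enabled"}},
            },
            "AnalysisCluster": {
                "Type": "AWS::EMR::Cluster",
                "Properties": {
                    "Name": cluster_name,
                    "ReleaseLabel": release_label,
                    "Applications": [{"Name": "Spark"}],
                    "Instances": {
                        "MasterInstanceGroup": dict(instance_group),
                        "CoreInstanceGroup": {**instance_group, "InstanceCount": 2},
                    },
                    # Default EMR roles, assumed to exist in the account.
                    "JobFlowRole": "EMR_EC2_DefaultRole",
                    "ServiceRole": "EMR_DefaultRole",
                },
            },
        },
    }


# Serialize to JSON, the form CloudFormation accepts as a template body.
template_json = json.dumps(build_template("research-cluster"), indent=2)
```

Keeping the template in code means every environment is created from the same reviewed definition rather than from manual console steps.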

6. Scalable Data Integration with Glue

AWS Glue helps us build seamless ETL pipelines, ensuring your data is clean, transformed, and ready for advanced analysis in EMR clusters or other AWS services.
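
The kind of clean-and-transform rule an ETL pipeline applies can be sketched in plain Python. This is not Glue's API (Glue jobs typically run as Spark scripts). It only illustrates the logic, and the field names `sample_id` and `reading` are hypothetical.

```python
from typing import Dict, Iterable, List, Optional


def clean_record(raw: Dict[str, str]) -> Optional[Dict[str, object]]:
    """Normalize one raw row, or return None if it cannot be used."""
    sample_id = raw.get("sample_id", "").strip()
    reading = raw.get("reading", "").strip()
    if not sample_id or not reading:
        return None  # Drop rows that are missing a required field.
    try:
        value = float(reading)
    except ValueError:
        return None  # Drop rows whose reading is not numeric.
    return {"sample_id": sample_id.upper(), "reading": value}


def run_etl(rows: Iterable[Dict[str, str]]) -> List[Dict[str, object]]:
    """Clean every row and keep only the ones that pass validation."""
    cleaned = (clean_record(row) for row in rows)
    return [row for row in cleaned if row is not None]


# Hypothetical input rows, used only for illustration.
rows = [
    {"sample_id": " a1 ", "reading": "3.5"},
    {"sample_id": "", "reading": "1"},
    {"sample_id": "b2", "reading": "n/a"},
]
clean_rows = run_etl(rows)
```

Only the first row survives: the second has no identifier and the third has a non-numeric reading.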